Voice Controlled Gomoku

ECE 5725 Final Project, designed by Yuhao Lu (yl3539) and Zehua Pan (zp74)


Introduction

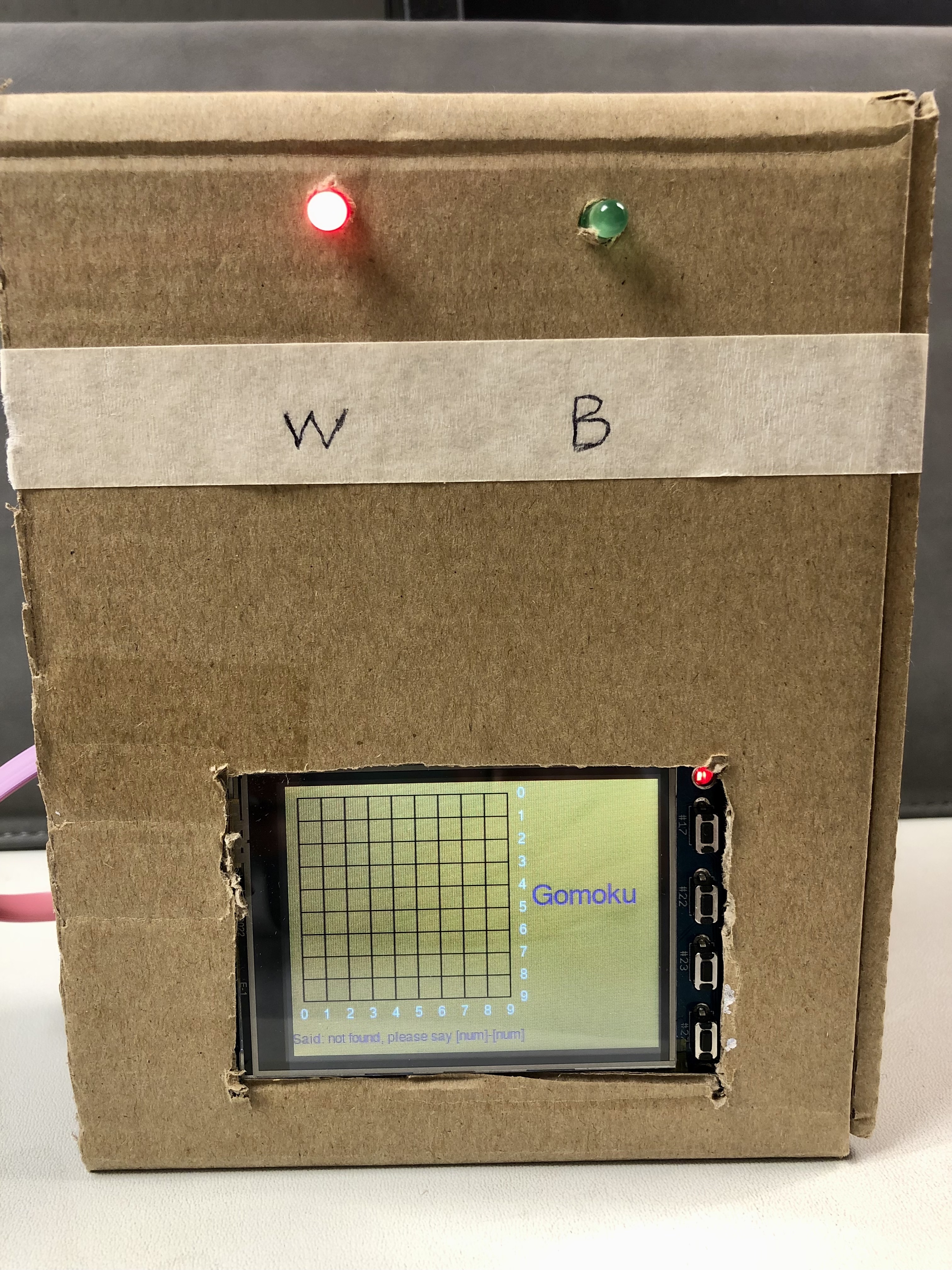

Playing games that require precise touch input, such as Gomoku, on a small screen can be frustrating for players with large fingers. We therefore redesigned Gomoku so that it needs only the player's voice. Are your hands busy, but you still want to play Gomoku? All you need to do is open the game and say “[a number] dash (-) [a number]”, and the game places your piece at the commanded position on the board. This hands-free style of play opens up new possibilities for game design. On the hardware side, the game runs on a Raspberry Pi connected to a USB microphone and a PiTFT screen that displays the Gomoku board. Two LEDs represent the two players and tell them when, and who, should speak a command. On the software side, we used a Python speech recognition library and the Google Cloud Speech API for speech recognition, and implemented the Gomoku game itself with the pygame library.

Project Objective

- Design and develop the Gomoku game

- Use a speech recognition algorithm to recognize the player's voice

- Recognize, convert, and parse the player's voice into a command, and send the command to the Gomoku game

- Make moves in the Gomoku game using the received command

Design and Testing

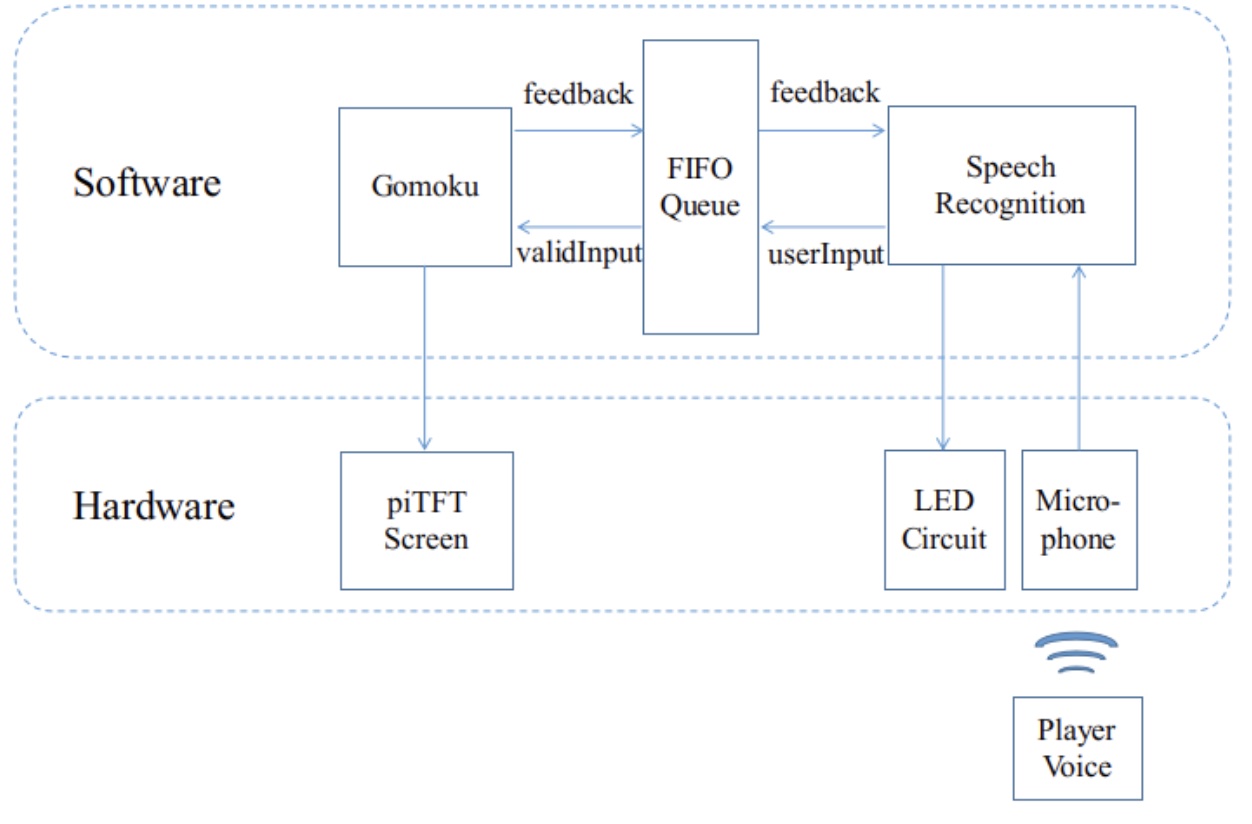

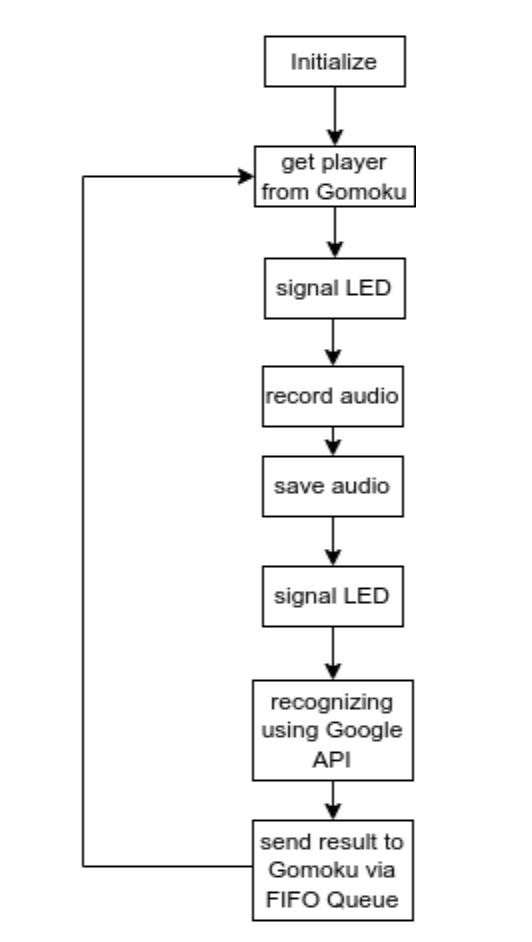

Figure 1 shows the overall design. The project can be decomposed into two major parts: software and hardware. The software is responsible for recognizing the player's speech and running the game logic, while the hardware collects the player's audio, displays the game board, and tells the player when to play.

Software Design

The software design is shown at the top of Figure 1. At a high level, it integrates three modules: Gomoku, FIFO Queue, and Speech Recognition, from left to right in the figure. As their names suggest, each module handles a different task. The FIFO Queue acts as the communication bridge between the Gomoku and Speech Recognition modules; it is described in detail in the FIFO Queue section below.

We followed an incremental design approach throughout the project. The Gomoku game was implemented first and could be played with keyboard input. We then implemented the Speech Recognition part, which recognizes the player's voice with high accuracy. Finally, the two parts were connected by the FIFO Queue module, which converts the raw user input into valid input for the Gomoku interface and sends feedback back to the Speech Recognition module.

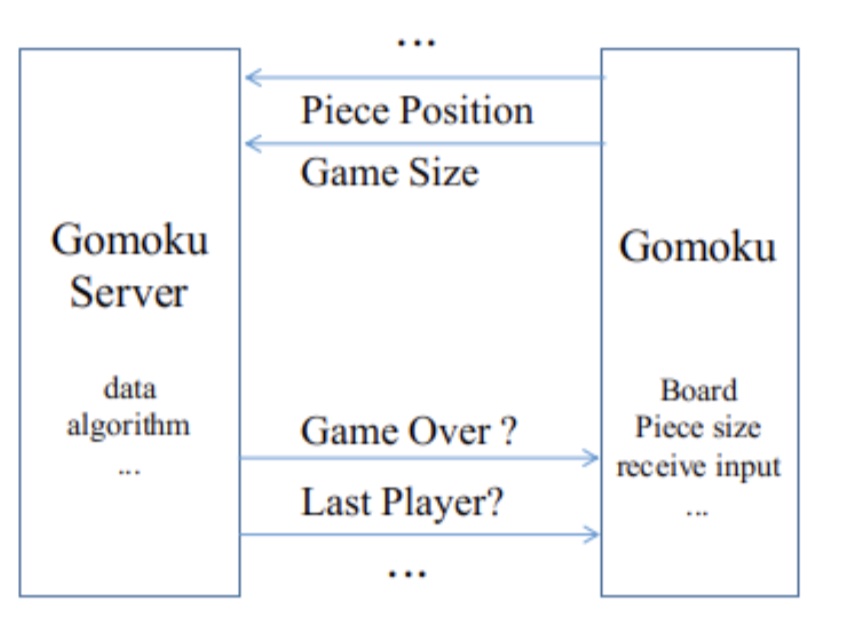

Gomoku

Figure 2 gives a high-level overview of the Gomoku module. The Gomoku game, which is displayed on the PiTFT screen of our Raspberry Pi, is built with the pygame module. To separate the game data from the GUI, we split the Gomoku module into two parts. The GomokuServer class stores the user input data and implements the algorithm that decides the winner. The Gomoku class builds the visible user interface, such as the game board and pieces, and provides the communication interface for receiving valid input and sending feedback.
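To illustrate the data/GUI split, here is a minimal, pygame-free sketch of a server class that only stores the board and decides the winner. The method names `gameOver` and `isDraw` match the calls in the main loop later in this report; everything else (the `place` method, the board encoding, black moving first, 1-based coordinates, and the five-in-a-row check) is an assumption for illustration, not the project's actual code.

```python
EMPTY, BLACK, WHITE = 0, 1, 2
WIN_LENGTH = 5  # five in a row wins


class GomokuServer:
    """Hypothetical sketch: holds the board and decides the winner, with no GUI code."""

    def __init__(self, rows=10, cols=10):
        self.rows, self.cols = rows, cols
        self.board = [[EMPTY] * cols for _ in range(rows)]
        self.current = BLACK  # assumption: black moves first
        self.winner = None
        self.moves = 0

    def place(self, row, col):
        """Place the current player's piece at 1-based (row, col); return True on success."""
        r, c = row - 1, col - 1
        if self.gameOver() or not (0 <= r < self.rows and 0 <= c < self.cols):
            return False
        if self.board[r][c] != EMPTY:
            return False  # intersection already occupied
        self.board[r][c] = self.current
        self.moves += 1
        if self._is_win(r, c):
            self.winner = self.current
        self.current = WHITE if self.current == BLACK else BLACK
        return True

    def _count(self, r, c, dr, dc):
        """Count same-coloured pieces from (r, c) in direction (dr, dc), excluding (r, c)."""
        color, n = self.board[r][c], 0
        r, c = r + dr, c + dc
        while 0 <= r < self.rows and 0 <= c < self.cols and self.board[r][c] == color:
            n += 1
            r, c = r + dr, c + dc
        return n

    def _is_win(self, r, c):
        # Only lines through the last move can be new wins: check 4 axes, both ways.
        for dr, dc in ((0, 1), (1, 0), (1, 1), (1, -1)):
            if 1 + self._count(r, c, dr, dc) + self._count(r, c, -dr, -dc) >= WIN_LENGTH:
                return True
        return False

    def gameOver(self):
        return self.winner is not None

    def isDraw(self):
        return self.winner is None and self.moves == self.rows * self.cols
```

Because the check uses `>=`, six or more in a row also counts as a win (freestyle rules); since the report says the rules are customizable, a stricter variant would change only `_is_win`.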

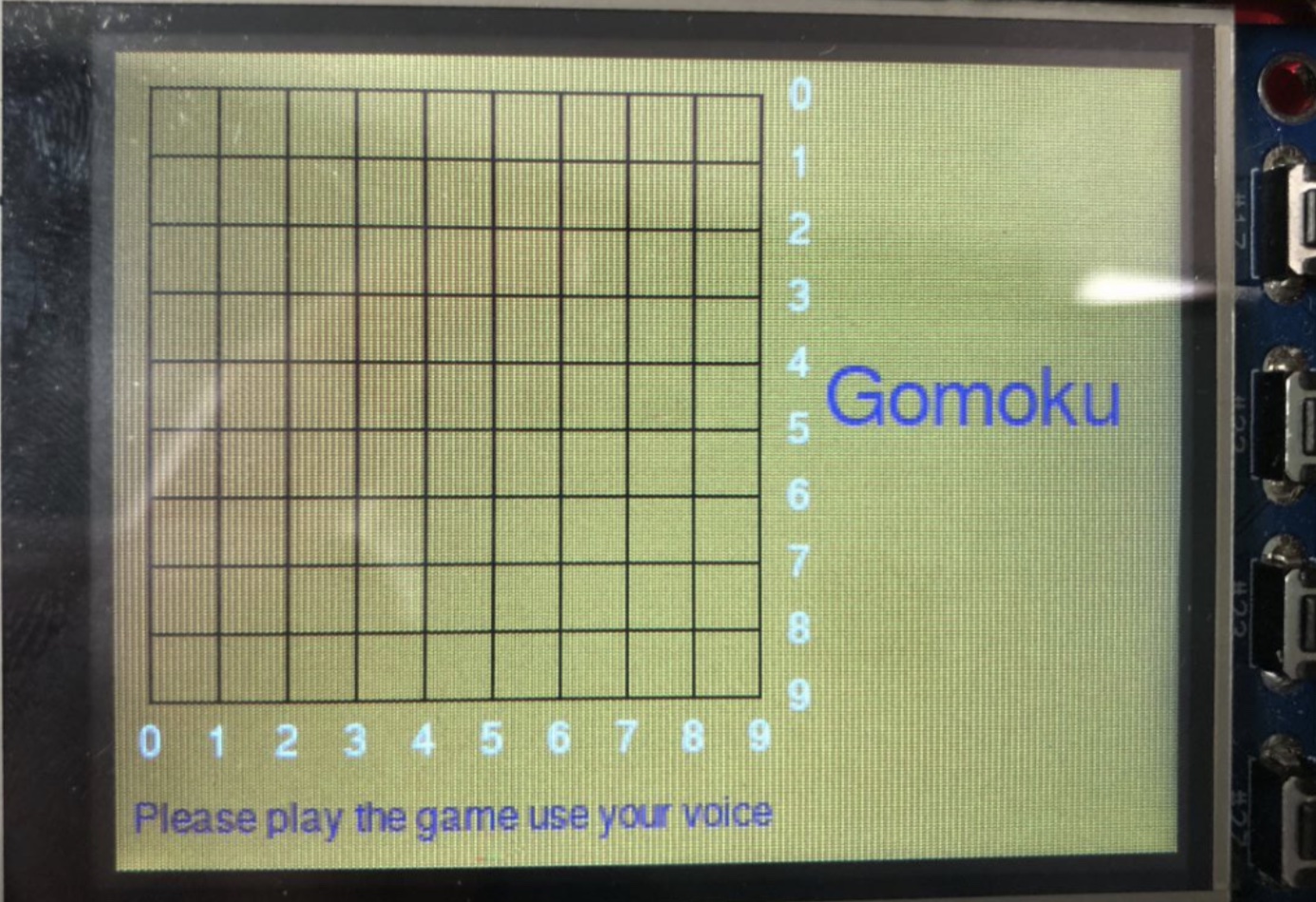

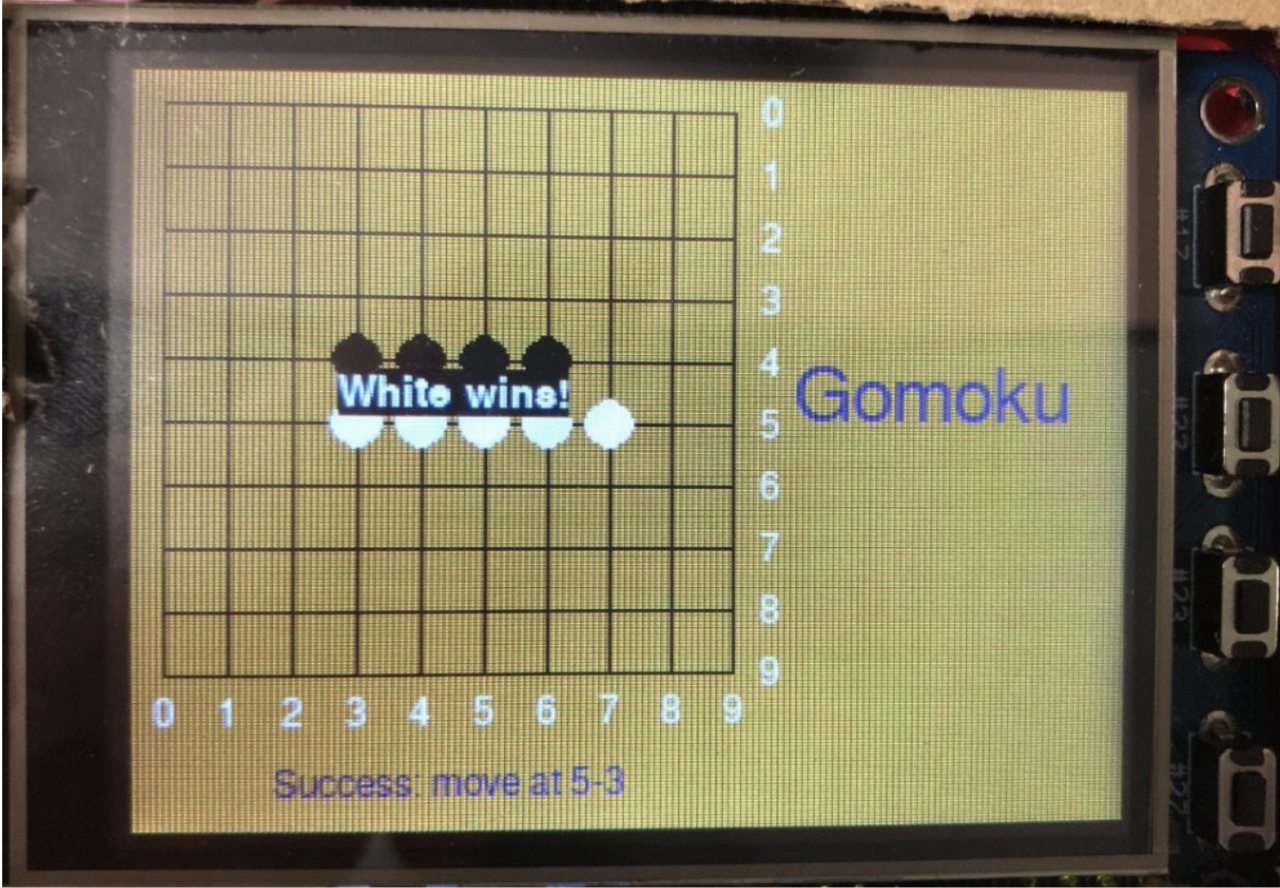

The workflow of the Gomoku module is as follows. When the module starts, the program first instantiates a Gomoku object, which initializes all states, such as the board size and piece size. Users can customize the board size and game rules before the game. Since the PiTFT screen is relatively small, we set the board to 10 rows and 10 columns. The play method is then called on this object. It first draws the empty game board and sends the initial state to the Speech Recognition module so that the correct LED lights up. See Figure 3 for the initial game board.
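Fitting a 10 × 10 board on the small screen comes down to mapping each (row, column) pair to a pixel position. The sketch below shows that arithmetic under stated assumptions: a 320 × 240 PiTFT resolution, pieces placed on grid intersections, 1-based coordinates, and a hypothetical 20-pixel margin. The function names are illustrative, and the actual pygame drawing is omitted.

```python
SCREEN_W, SCREEN_H = 320, 240  # assumption: PiTFT resolution in landscape
ROWS = COLS = 10               # board size used in the report
MARGIN = 20                    # hypothetical margin around the grid, in pixels


def cell_size(width=SCREEN_W, height=SCREEN_H, rows=ROWS, cols=COLS, margin=MARGIN):
    """Largest integer spacing between grid lines that fits the board on screen."""
    return min((width - 2 * margin) // (cols - 1), (height - 2 * margin) // (rows - 1))


def to_pixel(row, col, width=SCREEN_W, height=SCREEN_H, rows=ROWS, cols=COLS, margin=MARGIN):
    """Map a 1-based (row, col) intersection to its (x, y) pixel, centring the grid."""
    size = cell_size(width, height, rows, cols, margin)
    x0 = (width - size * (cols - 1)) // 2   # left edge of the centred grid
    y0 = (height - size * (rows - 1)) // 2  # top edge of the centred grid
    return x0 + (col - 1) * size, y0 + (row - 1) * size
```

With these numbers the spacing is 22 pixels, limited by the screen height, so the grid runs from (61, 21) to (259, 219). A piece could then be drawn with `pygame.draw.circle(screen, color, to_pixel(row, col), radius)`.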

After that, the play method enters a main loop that processes user input. The loop logic is based on a finite state machine (FSM) with three states: receiving user input from the FIFO Queue module, handling the command (including special commands), and sending feedback to the Speech Recognition module through the FIFO Queue. The loop code is shown below.

```python
# FSM: receive -> handle -> send feedback -> receive ...
while not self.gomokuServer.gameOver() and not self.gomokuServer.isDraw():
    # Receive: capture speech events (this part can be extracted into a class)
    isCMD_Valid, userRow, userCol, newMsg = self.inputHandler.getCommand()

    # Handle: special commands first; "again" restarts the game
    if self.handleSpecialCMD(newMsg) == "again":
        return
    if isCMD_Valid:
        self.makeMove(userRow, userCol)
    self.hintMsg(newMsg.capitalize())
    pygame.display.flip()

    # Send feedback to the Speech Recognition module (which LED to light)
    self.sendLEDColors()
```

In the main loop, the program keeps updating the game board according to the users' input. When one of the players wins, the game ends and the outcome is shown in the middle of the board. See Figure 4 for an example result.

The Gomoku class also defines a series of methods to handle special user inputs, which control the high-level flow of the game. For example, after the game is over, the user can say “again” to replay. When the code sees “again”, it enters the corresponding handler, which reinitializes the Gomoku object and restarts the game.
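Special commands can be routed through a small dispatch table that is checked before the input is treated as a move. The sketch below is hypothetical: only “again” comes from the report, and the class name, the `restart` callback, and the table layout are illustrative assumptions.

```python
class SpecialCommands:
    """Hypothetical sketch of special-command dispatch; only "again" comes from the report."""

    def __init__(self, restart):
        self.restart = restart  # callback that rebuilds the game, e.g. a new Gomoku object
        self.handlers = {"again": self._again}

    def _again(self):
        self.restart()
        return "again"

    def handle(self, message):
        """Run the handler for a special command; return its name, or None for ordinary input."""
        handler = self.handlers.get(message.strip().lower())
        return handler() if handler else None
```

Returning the command name fits the main loop above, which leaves `play` when `handleSpecialCMD` reports "again". Further commands would only need another entry in `handlers`.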

Speech Recognition

See Figure 5 for the control flow of the Speech Recognition module. Once the Gomoku game was working, we built the Speech Recognition module. It uses the Python speech_recognition package to capture the player's audio and convert it into a text string. The speech_recognition package relies on the pyaudio package, which records the player's voice and saves the voice data to a .wav file.

After obtaining the voice data, we use the Google API to process it and get the recognized result. Because we rely on the Google recognition engine, the Raspberry Pi must be connected to the Internet. In fact, several speech recognition engines can be used through the speech_recognition package. We quickly ruled out the offline options because the offline engine needs a large amount of disk space to store its voice data set, which was impractical on our Raspberry Pi. We then explored several online solutions and found that the Google API performed best.
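The record-then-recognize cycle can be sketched as below. `Recognizer`, `Microphone`, `adjust_for_ambient_noise`, `record(source, duration=...)` and `recognize_google` are real calls in the SpeechRecognition package; the 3-second window, the FIFO path, and the helper names are assumptions. The sketch uses `recognize_google`, although the report does not say exactly which Google endpoint was used. The loop takes injected callables so it can be exercised without a microphone; on the Pi, `main()` wires in the real library.

```python
FIFO_PATH = "/tmp/gomoku_speech_to_game"  # hypothetical FIFO path
RECORD_SECONDS = 3                        # assumption: length of each recording window


def capture_loop(record, recognize, send, rounds=None):
    """Record a short clip, recognize it, and forward non-empty text.

    record() returns audio, recognize(audio) returns text or None on failure,
    send(text) delivers the text to the Gomoku process. rounds=None loops forever.
    """
    n = 0
    while rounds is None or n < rounds:
        n += 1
        text = recognize(record())
        if text and text.strip():  # never forward empty content to the FIFO
            send(text.strip())


def main():
    import speech_recognition as sr  # imported lazily: needs PyAudio and a microphone

    recognizer = sr.Recognizer()

    def recognize(audio):
        try:
            return recognizer.recognize_google(audio)  # requires Internet access
        except (sr.UnknownValueError, sr.RequestError):
            return None  # unintelligible speech or network/API error

    def send(text):
        with open(FIFO_PATH, "w") as fifo:  # blocks until the Gomoku side opens the FIFO
            fifo.write(text)

    with sr.Microphone() as source:
        recognizer.adjust_for_ambient_noise(source)
        capture_loop(lambda: recognizer.record(source, duration=RECORD_SECONDS),
                     recognize, send)
```

Recording a fixed window and then recognizing it trades responsiveness for speed, which matches the compromise described in Result & Conclusion.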

FIFO Queue

With both modules complete, we needed to connect them so that the whole system works, and this is the tricky part. The Gomoku module and the Speech Recognition module are separate programs that must run at the same time: one shows the game board in the foreground and the other records the player's voice in the background. Because they are two different processes, each with its own address space, one program cannot simply read a variable belonging to the other. Connecting them is therefore an inter-process communication problem.

To solve this problem, we use FIFOs (named pipes) as the communication bridge between the Gomoku module and the Speech Recognition module. The key idea is that a FIFO behaves like a queue. Whenever the Gomoku module needs user input, it opens a FIFO and waits until the Speech Recognition module writes the user input into it. Once the input has been written, the Gomoku module reads it and continues. This covers communication from the Speech Recognition module to the Gomoku module; the opposite direction works the same way.
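A minimal sketch of this FIFO bridge using POSIX named pipes through `os.mkfifo`, with one pipe per direction. The paths and helper names are hypothetical, since the report does not give them. Opening a FIFO for reading blocks until another process opens it for writing (and vice versa), which is what makes the Gomoku side wait for input.

```python
import os

# Hypothetical FIFO locations, one per direction.
SPEECH_TO_GAME = "/tmp/gomoku_speech_to_game"
GAME_TO_SPEECH = "/tmp/gomoku_game_to_speech"


def ensure_fifos(*paths):
    """Create each named pipe if it does not exist yet (POSIX only)."""
    for path in paths:
        if not os.path.exists(path):
            os.mkfifo(path)


def send(path, message):
    """Write one message; open() blocks until the other process opens the FIFO for reading."""
    with open(path, "w") as fifo:
        fifo.write(message)


def receive(path):
    """Read one message; open() blocks until the other process opens the FIFO for writing."""
    with open(path, "r") as fifo:
        return fifo.read()  # returns once the writer closes its end
```

In this layout the Gomoku process would call `receive(SPEECH_TO_GAME)` for moves and `send(GAME_TO_SPEECH, ...)` for LED feedback, and the Speech Recognition process would do the reverse. Note that if a writer opens and closes the pipe without writing, `receive` returns an empty string.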

The FIFO Queue module is also responsible for processing the user input. Because the player must specify the position of a piece, the input follows a fixed format: [number] - [number]. For example, to place a piece at coordinate (1, 1), the player says “one dash one”. The Speech Recognition module converts this voice message into the string “1-1”, which the InputHandler parses into the two numbers 1 and 1 and passes to the Gomoku module as integer input.
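The parsing step can be sketched as a function returning the same four values that `getCommand` returns in the main loop: a validity flag, the row, the column, and a message. The regular expression, the 1 to 10 range check, and the message wording are assumptions for illustration.

```python
import re

BOARD_SIZE = 10  # board size used in the report
# Accepts "1-1", "10 - 3", etc.; whitespace around the dash is tolerated (assumption).
MOVE_PATTERN = re.compile(r"^\s*(\d+)\s*-\s*(\d+)\s*$")


def parse_command(text, size=BOARD_SIZE):
    """Return (is_valid, row, col, message) for a recognized string such as "1-1"."""
    if not text or not text.strip():
        return False, None, None, "empty input, please try again"
    match = MOVE_PATTERN.match(text)
    if not match:
        # Not a move; pass the text on so special commands like "again" still work.
        return False, None, None, text.strip().lower()
    row, col = int(match.group(1)), int(match.group(2))
    if not (1 <= row <= size and 1 <= col <= size):
        return False, None, None, f"{row}-{col} is off the board"
    return True, row, col, f"move {row}-{col}"
```

For example, `parse_command("1-1")` returns `(True, 1, 1, "move 1-1")`, while `parse_command("again")` returns an invalid move whose message can still be handed to the special-command handler.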

Hardware Design

See the second dotted frame in Figure 1 for the hardware part of this project.

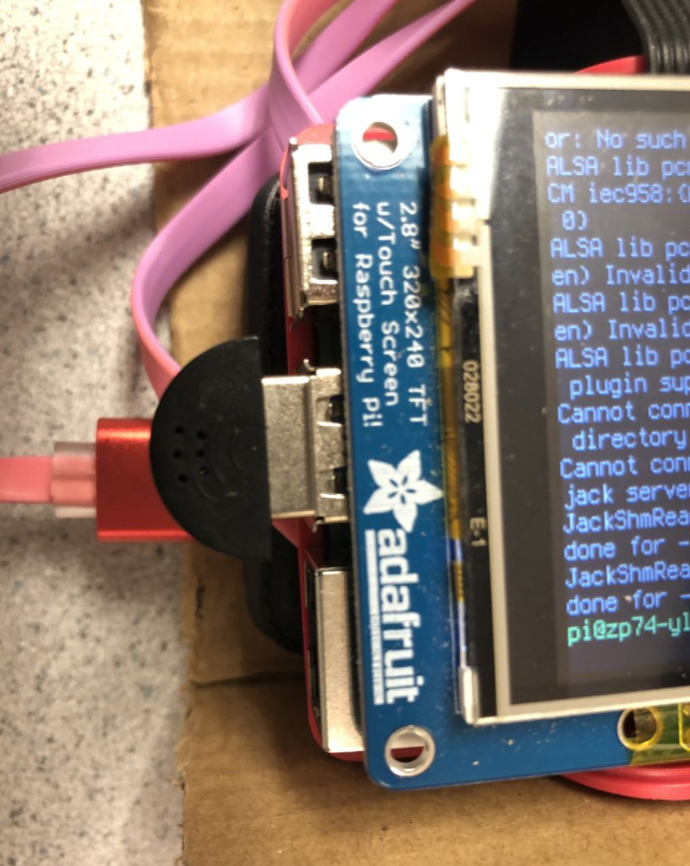

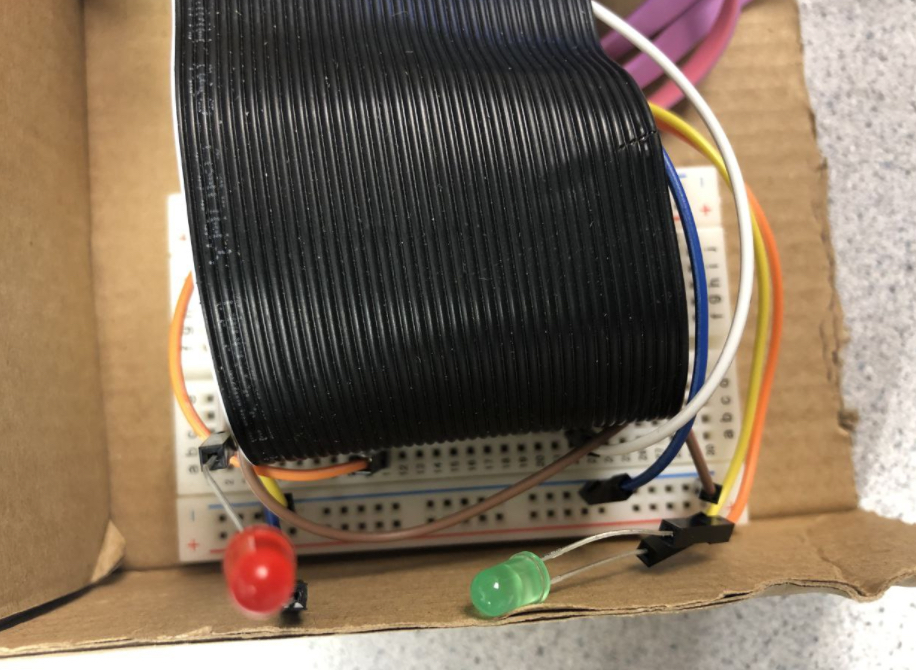

We connected three hardware components to the Raspberry Pi: the PiTFT, a USB microphone, and an LED circuit. The USB microphone, attached through a USB port, collects the player's voice, and the LED circuit indicates which player may move at a given moment. GPIO 13 and GPIO 17 provide the output voltage for the two LEDs.

When the red LED is lit, the player holding the white pieces moves; when the green LED is lit, the player holding the black pieces moves. Players should speak loudly enough for the microphone to catch the command, and playing in a quiet environment improves recognition.

See Figure 6 for the USB microphone. See Figure 7 for the LED circuit.
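The LED logic can be sketched as a pure function from the player to move to pin levels, plus a thin RPi.GPIO wrapper. GPIO 13 and 17 come from the report, but it does not say which pin drives which colour, so the assignment below (red on 13, green on 17, BCM numbering), the player names, and the all-off state are assumptions.

```python
RED_PIN, GREEN_PIN = 13, 17  # assumption: red on GPIO 13, green on GPIO 17 (BCM numbering)


def led_levels(player_to_move):
    """Map the player to move to pin levels: red = white's turn, green = black's turn."""
    if player_to_move == "white":
        return {RED_PIN: True, GREEN_PIN: False}
    if player_to_move == "black":
        return {RED_PIN: False, GREEN_PIN: True}
    return {RED_PIN: False, GREEN_PIN: False}  # e.g. game over: both LEDs off (assumption)


def setup_leds():
    import RPi.GPIO as GPIO  # imported lazily: only available on a Raspberry Pi
    GPIO.setmode(GPIO.BCM)
    GPIO.setup([RED_PIN, GREEN_PIN], GPIO.OUT, initial=GPIO.LOW)
    return GPIO


def show_turn(gpio, player_to_move):
    """Drive both LEDs using any module that exposes output(pin, level), HIGH and LOW."""
    for pin, on in led_levels(player_to_move).items():
        gpio.output(pin, gpio.HIGH if on else gpio.LOW)
```

On the Pi this would be used as `show_turn(setup_leds(), "white")`, with the player name taken from the feedback the Gomoku process sends over the FIFO; that message format is also hypothetical.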

Issues & solutions

During the development of this project, we ran into several issues. Each is listed below with its solution.

- Empty FIFO content freezes the program. Solution: send only non-empty content to the FIFO, and add an empty check in the InputHandler class.

- Compatibility with the PiTFT: booting the RPi without an HDMI cable does not start the program properly. Solution: without an HDMI monitor attached, the Raspberry Pi detects only one display device, the PiTFT. We solved this by setting the initial framebuffer to fb0.

- Multiple identical outputs in the terminal. Solution: our speech recognition program runs in the background and can accidentally be started more than once. Kill all background speech recognition processes before starting a new one.

- Error when installing pyaudio. Solution: install the dependencies with `sudo apt install portaudio19-dev python3-pyaudio` (see the PyAudio reference for details).

- Error: cannot connect to server, jack control... Solution: ignore the warning. This error does not affect speech recognition.
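The empty-content fix can be sketched as a reader that retries until it gets a non-empty message. A FIFO read returns an empty string when a writer opens and closes the pipe without writing, so without such a check an empty string reaches the game logic. The function name, the retry limit, and the injected `read_once` callable are hypothetical.

```python
def read_nonempty(read_once, max_attempts=None):
    """Call read_once() until it returns non-blank text; return that text stripped.

    read_once is any zero-argument callable, e.g. one that opens the FIFO and reads it.
    Returns None if max_attempts reads were all blank (max_attempts=None retries forever).
    """
    attempts = 0
    while max_attempts is None or attempts < max_attempts:
        attempts += 1
        text = read_once()
        if text and text.strip():
            return text.strip()
    return None
```

In practice `read_once` would open the FIFO in a `with` block and return `f.read()`, so each retry reopens the pipe and waits for the next writer.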

Result & Conclusion

In this project, we achieved most of our objectives despite some setbacks. However, one feature did not work as expected. For speech recognition, we planned to monitor the player's voice in real time, but real-time recognition on the Raspberry Pi was disappointingly slow. We therefore used an alternative approach: we record the player's voice for a short period and then send the recording to the Google speech recognition engine, which converts it to text. This significantly improved processing speed, at the cost of a less fluid way of receiving commands. In addition, recognition accuracy can be unstable depending on the player's accent and background noise.

Future Work

Given more time, we would like to explore and add the following features:

- Build the real-time speech recognition system that we could not achieve in this version.

- Add an undo (take-back) function to the Gomoku game.

- Add an AI opponent with different difficulty levels, so that players can choose to play against the AI or against another human.

- Add a “cheat” function that tells players the optimal position for their next move.

- Add more voice-controlled games to the Raspberry Pi.

Work Distribution

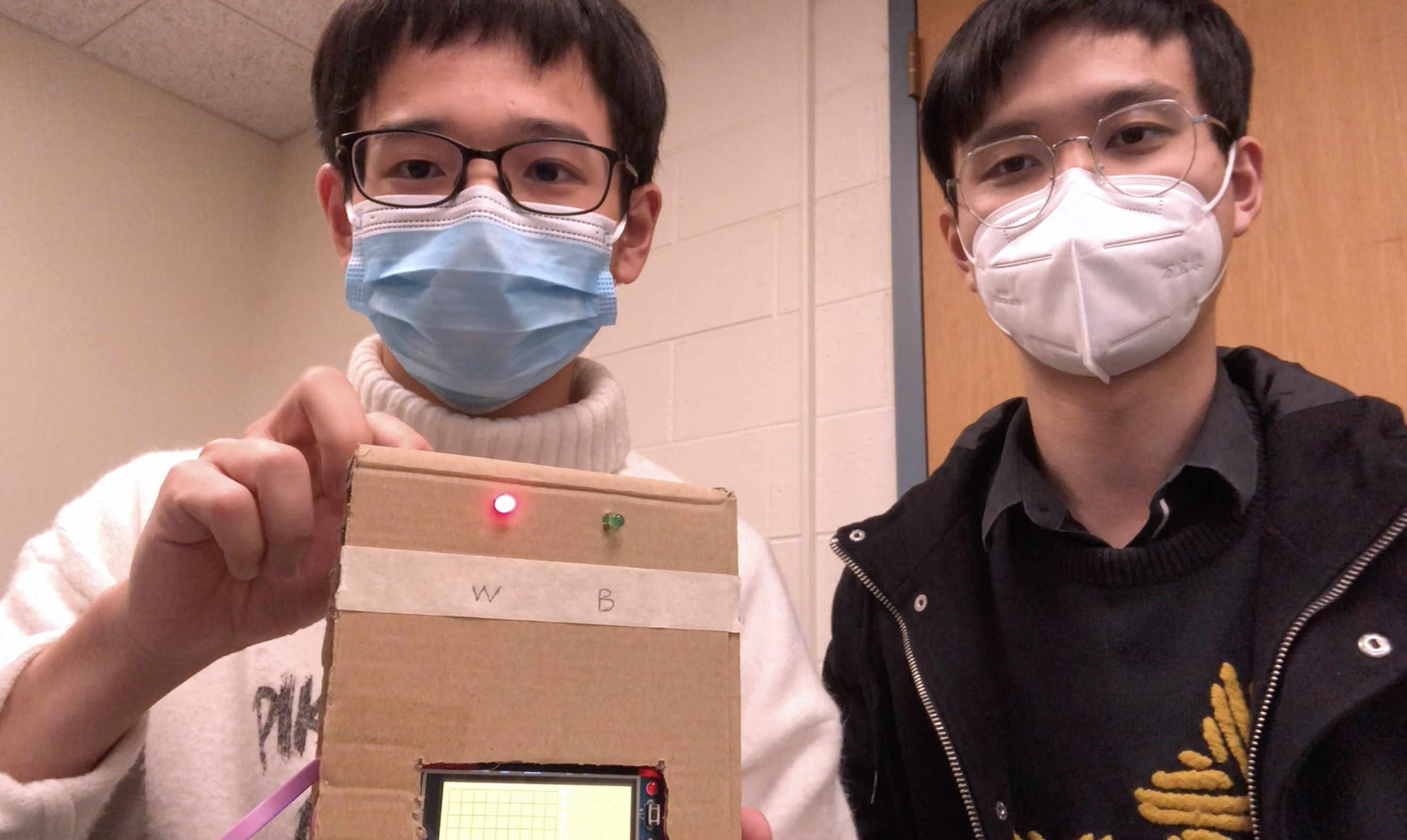

Project group picture

Yuhao Lu

yl3539@cornell.edu

Designed and implemented the speech recognition module of the software. Designed and built the LED circuit. Tested and debugged the whole system.

Zehua Pan

zp74@cornell.edu

Designed and implemented the Gomoku game module and the FIFO communication. Tested and debugged the whole system.

Parts List

- Mini USB Microphone - Provided in lab

- Raspberry Pi 4 - Provided in lab

Total: $0.00

References

PiDog, by Aryaa Vivek Pai and Krithik Ranjan

PyAudio, by Hubert Pham

SpeechRecognition, by Anthony Zhang et al.

Pygame

Speech Recognition using Google Speech API and Python

Gomoku (Connect 5) game implementation in pygame